For example, two statements are parallel − Ram wanted car.

It infers p(a1,a2,…) from assertion of S 0 and p(b1,b2,…) from assertion S 1. For example, two statements show the relationship − Ram fought with Shyam’s friend. It infers that the state asserted by S 1 could cause the state asserted by S 0. For example, two statements show the relationship result: Ram was caught in the fire. It infers that the state asserted by term S 0 could cause the state asserted by S 1. We are taking two terms S 0 and S 1 to represent the meaning of the two related sentences − Result Hebb has proposed such kind of relations as follows − As we know that coherence relation defines the possible connection between utterances in a discourse. To achieve the coherent discourse, we must focus on coherence relations in specific. Lexical repetition is a way to find the structure in a discourse, but it does not satisfy the requirement of being coherent discourse. These discourse markers are domain-specific. Discourse marker or cue word is a word or phrase that functions to signal discourse structure. In supervised discourse segmentation, discourse marker or cue words play an important role. On the other hand, supervised discourse segmentation needs to have boundary-labeled training data. The earlier method does not have any hand-labeled segment boundaries. On the other hand, lexicon cohesion is the cohesion that is indicated by the relationship between two or more words in two units like the use of synonyms. These algorithms are dependent on cohesion that may be defined as the use of certain linguistic devices to tie the textual units together. In the example, there is a task of segmenting the text into multi-paragraph units the units represent the passage of the original text. We can understand the task of linear segmentation with the help of an example. The class of unsupervised discourse segmentation is often represented as linear segmentation. 
The algorithms are described below − Unsupervised Discourse Segmentation In this section, we will learn about the algorithms for discourse segmentation. It is quite difficult to implement discourse segmentation, but it is very important for information retrieval, text summarization and information extraction kind of applications. Discourse segmentations may be defined as determining the types of structures for large discourse.

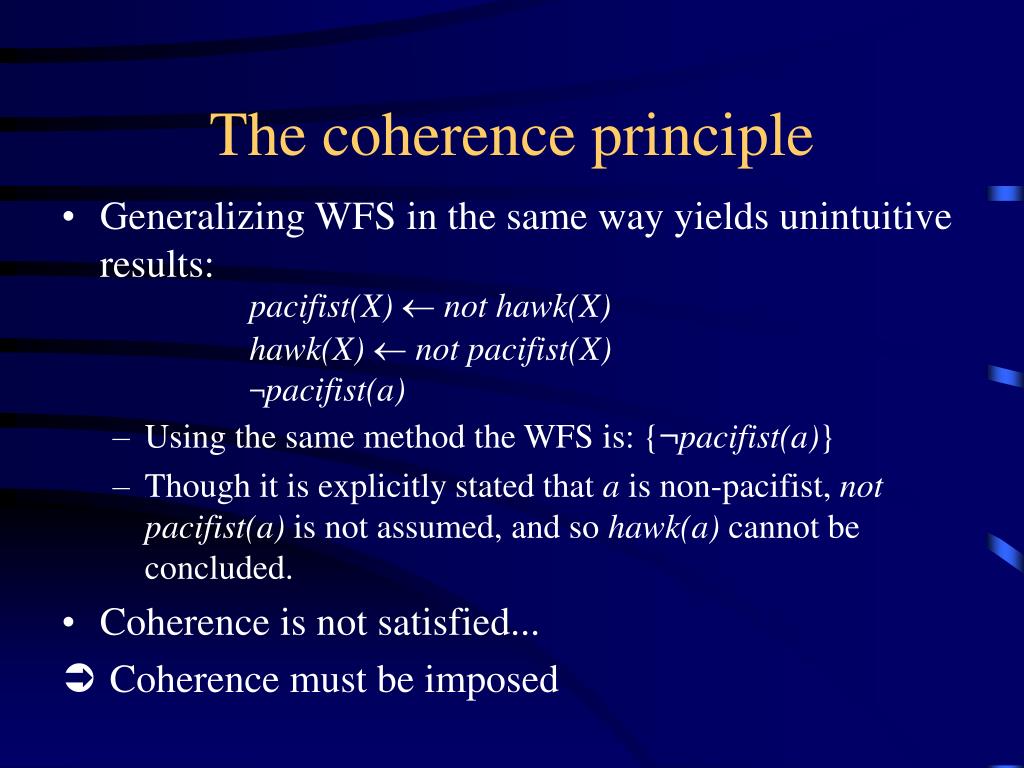

The answer to this question depends upon the segmentation we applied on discourse. Discourse structureĪn important question regarding discourse is what kind of structure the discourse must have. Such kind of coherence is called entity-based coherence. Relationship between entitiesĪnother property that makes a discourse coherent is that there must be a certain kind of relationship with the entities. For example, some sort of explanation must be there to justify the connection between utterances. This property is called coherence relation. The discourse would be coherent if it has meaningful connections between its utterances. The coherent discourse must possess the following properties − Coherence relation between utterances It is because these sentences do not exhibit coherence. The question that arises here is what does it mean for a text to be coherent? Suppose we collected one sentence from every page of the newspaper, then will it be a discourse? Of-course, not. Coherence, along with property of good text, is used to evaluate the output quality of natural language generation system. Concept of CoherenceĬoherence and discourse structure are interconnected in many ways. These coherent groups of sentences are referred to as discourse.

Actually, the language always consists of collocated, structured and coherent groups of sentences rather than isolated and unrelated sentences like movies. If we talk about the major problems in NLP, then one of the major problems in NLP is discourse processing − building theories and models of how utterances stick together to form coherent discourse. The most difficult problem of AI is to process the natural language by computers or in other words natural language processing is the most difficult problem of artificial intelligence.
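A small sketch makes the lexical-cohesion idea behind unsupervised linear segmentation more concrete. It is a simplified variant in the spirit of Hearst's TextTiling, not a full implementation. At each gap between sentences, it compares bag-of-words vectors of the sentences on either side and proposes a boundary where cosine similarity dips to a low local minimum. The tokenizer, the stopword list, the `window` and `threshold` values, and the name `linear_segment` are all illustrative assumptions.

```python
import math
import re
from collections import Counter

# Illustrative stopword list (an assumption): without it, function words such as
# "the" would create spurious cohesion between unrelated sentences.
STOPWORDS = {"a", "an", "the", "is", "as", "and", "from", "to", "of", "in"}

def tokenize(sentence):
    """Lowercase word tokens with stopwords removed (deliberately crude)."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def linear_segment(sentences, window=2, threshold=0.1):
    """Return the indices i where a segment boundary falls before sentences[i]."""
    bags = [Counter(tokenize(s)) for s in sentences]
    scores = {}
    for gap in range(1, len(sentences)):
        # Pool up to `window` sentences on each side of the gap.
        left = sum(bags[max(0, gap - window):gap], Counter())
        right = sum(bags[gap:gap + window], Counter())
        scores[gap] = cosine(left, right)
    boundaries = []
    for gap, score in scores.items():
        # Weak lexical cohesion: a local minimum that is also below the threshold.
        is_local_min = (score <= scores.get(gap - 1, math.inf)
                        and score <= scores.get(gap + 1, math.inf))
        if is_local_min and score < threshold:
            boundaries.append(gap)
    return boundaries

sentences = [
    "The cat chased the mouse.",
    "The mouse hid from the cat.",
    "A cat is a patient hunter.",
    "Stock prices rose sharply today.",
    "Investors bought stock as prices climbed.",
    "Markets and prices stayed strong.",
]
print(linear_segment(sentences))  # → [3]
```

On these sentences, the only gap with no shared content words falls between the cat sentences and the stock-market sentences. A fixed threshold is the crudest possible choice. TextTiling proper smooths the similarity scores and ranks gaps by how deep each dip is relative to its neighbours.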
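In the supervised setting, one way to use cue words is as features for a classifier trained on boundary-labeled data. The sketch below is hypothetical throughout. The cue-word list, the two features (whether a sentence begins with a cue word, and its word overlap with the previous sentence), the toy training text, and the choice of a perceptron as the classifier are all assumptions for illustration, not a method prescribed by this tutorial.

```python
import re

# Hypothetical cue-word list. Discourse markers are domain-specific,
# so a real system would build this list for its target domain.
CUE_WORDS = {"now", "next", "finally", "meanwhile", "however"}

def words(sentence):
    return re.findall(r"[a-z']+", sentence.lower())

def features(prev_sentence, sentence):
    """Features for the gap before `sentence`: [bias, starts-with-cue, overlap]."""
    toks = words(sentence)
    shared = set(words(prev_sentence)) & set(toks)
    starts_with_cue = 1.0 if toks and toks[0] in CUE_WORDS else 0.0
    overlap = len(shared) / max(1, len(set(toks)))
    return [1.0, starts_with_cue, overlap]

def predict(w, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else 0

def gap_examples(sentences, boundaries):
    """Turn a boundary-labeled text into (features, label) pairs, one per gap."""
    return [(features(sentences[i - 1], sentences[i]), int(i in boundaries))
            for i in range(1, len(sentences))]

def train_perceptron(examples, epochs=20):
    """Classic perceptron: move the weights toward each misclassified example."""
    w = [0.0] * len(examples[0][0])
    for _ in range(epochs):
        for x, y in examples:
            error = y - predict(w, x)
            if error:
                w = [wi + error * xi for wi, xi in zip(w, x)]
    return w

def segment(w, sentences):
    return [i for i in range(1, len(sentences))
            if predict(w, features(sentences[i - 1], sentences[i]))]

# Toy boundary-labeled training text: boundaries before sentences 2 and 5.
train = [
    "The council met on Monday.",
    "The council approved the budget.",
    "Now the weather.",
    "Rain is expected on Tuesday.",
    "Rain will clear by Friday.",
    "Finally sports.",
    "The home team won again.",
]
w = train_perceptron(gap_examples(train, {2, 5}))

test = [
    "Prices fell in early trading.",
    "Prices recovered by noon.",
    "Meanwhile the election campaign continued.",
    "The candidates debated on television.",
]
print(segment(w, test))  # → [2]
```

The learned weights favour a boundary when a sentence opens with a cue word and penalise lexical overlap with the preceding sentence. This reflects the point above that supervised segmentation leans on discourse markers. Because those markers are domain-specific, a model trained on one genre may transfer poorly to another.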
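Hobbs's relations are defined over the meanings of S0 and S1, so they can be illustrated with a toy classifier over hand-built meaning representations. Everything here is a hypothetical simplification. The `Prop` class and its predicate names are invented. The `CAUSES` table stands in for world knowledge about which states can cause which others, and the `TYPES` table provides a crude notion of argument similarity. The second sentences in the examples (such as Shyam wanting a bike) are also invented for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Prop:
    """Toy meaning representation of a sentence: predicate(arg1, arg2, ...)."""
    predicate: str
    args: tuple

# Hypothetical world knowledge: (cause, effect) predicate pairs.
CAUSES = {("caught_in", "injured"), ("drunk", "fought_with")}
# Hypothetical semantic types; here "similar" arguments means "same known type".
TYPES = {"Ram": "person", "Shyam": "person", "car": "object", "bike": "object"}

def similar(a, b):
    return TYPES.get(a) is not None and TYPES.get(a) == TYPES.get(b)

def coherence_relation(s0, s1):
    """Return "Result", "Explanation", "Parallel", or None for the pair (S0, S1)."""
    if (s0.predicate, s1.predicate) in CAUSES:
        return "Result"       # the state in S0 could cause the state in S1
    if (s1.predicate, s0.predicate) in CAUSES:
        return "Explanation"  # the state in S1 could cause the state in S0
    if (s0.predicate == s1.predicate and len(s0.args) == len(s1.args)
            and all(similar(a, b) for a, b in zip(s0.args, s1.args))):
        return "Parallel"     # p(a1, a2, ...) and p(b1, b2, ...) with ai similar to bi
    return None

print(coherence_relation(Prop("want", ("Ram", "car")), Prop("want", ("Shyam", "bike"))))  # → Parallel
print(coherence_relation(Prop("caught_in", ("Ram", "fire")), Prop("injured", ("Ram",))))  # → Result
```

The rule structure is the easy part. In practice, the difficulty lies in the knowledge this sketch takes for granted: deciding that one state could cause another, or that two arguments are similar, requires inference that the two lookup tables only approximate.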
